opencv/samples/cpp/generic_descriptor_match.cpp at 2.4 · opencv/opencv

samples\cpp\generic_descriptor_match.cpp: extracting image keypoints and matching them with various descriptor-matching algorithms

```cpp
#include "opencv2/opencv_modules.hpp"
#include <cstdio>

// Check whether OpenCV was built with the nonfree module; if not, print an error
#ifndef HAVE_OPENCV_NONFREE

int main(int, char**)
{
    printf("The sample requires nonfree module that is not available in your OpenCV distribution.\n");
    return -1;
}

#else

// Required OpenCV headers
# include "opencv2/opencv_modules.hpp"
# include "opencv2/calib3d/calib3d.hpp"
# include "opencv2/features2d/features2d.hpp"
# include "opencv2/highgui/highgui.hpp"
# include "opencv2/imgproc/imgproc.hpp"
# include "opencv2/nonfree/nonfree.hpp"

using namespace cv;

// Print usage information
static void help()
{
    printf("Use the SURF descriptor for matching keypoints between 2 images\n");
    printf("Format: \n./generic_descriptor_match <image1> <image2> <algorithm> <XML params>\n");
    printf("For example: ./generic_descriptor_match ../c/scene_l.bmp ../c/scene_r.bmp FERN fern_params.xml\n");
}

// Declaration: draw the keypoint correspondences
Mat DrawCorrespondences(const Mat& img1, const vector<KeyPoint>& features1, const Mat& img2,
                        const vector<KeyPoint>& features2, const vector<DMatch>& desc_idx);

int main(int argc, char** argv)
{
    // Show usage if the number of arguments is wrong
    if (argc != 5)
    {
        help();
        return 0;
    }

    // Read the inputs from the command line
    std::string img1_name = std::string(argv[1]);
    std::string img2_name = std::string(argv[2]);
    std::string alg_name = std::string(argv[3]);        // matcher name, e.g. FERN
    std::string params_filename = std::string(argv[4]); // path to the parameter file

    // Create a generic descriptor matcher from the algorithm name and the parameter file
    Ptr<GenericDescriptorMatcher> descriptorMatcher = GenericDescriptorMatcher::create(alg_name, params_filename);
    if (descriptorMatcher.empty())
    {
        printf("Cannot create descriptor matcher\n");
        return 0;
    }

    // Load both input images as grayscale
    Mat img1 = imread(img1_name, CV_LOAD_IMAGE_GRAYSCALE);
    Mat img2 = imread(img2_name, CV_LOAD_IMAGE_GRAYSCALE);
    if (img1.empty() || img2.empty())
    {
        printf("Cannot read the input images\n");
        return -1;
    }

    // Detect keypoints with SURF
    SURF surf_extractor(5.0e3);   // SURF detector with a Hessian threshold of 5000
    vector<KeyPoint> keypoints1, keypoints2;

    // Keypoints of the first image
    surf_extractor(img1, Mat(), keypoints1);
    printf("Extracted %d keypoints from the first image\n", (int)keypoints1.size());

    // Keypoints of the second image
    surf_extractor(img2, Mat(), keypoints2);
    printf("Extracted %d keypoints from the second image\n", (int)keypoints2.size());

    // Match the keypoints with the descriptor matcher
    vector<DMatch> matches2to1;   // matches from img2 (query) to img1 (train)
    printf("Finding nearest neighbors... \n");
    descriptorMatcher->match(img2, keypoints2, img1, keypoints1, matches2to1);
    printf("Done\n");

    // Draw and display the matches
    Mat img_corr = DrawCorrespondences(img1, keypoints1, img2, keypoints2, matches2to1);
    imshow("correspondences", img_corr);
    waitKey(0);   // wait for a key press
}

// Definition: draw both images side by side, their keypoints, and a line for every match
Mat DrawCorrespondences(const Mat& img1, const vector<KeyPoint>& features1, const Mat& img2,
                        const vector<KeyPoint>& features2, const vector<DMatch>& desc_idx)
{
    // Canvas large enough to hold both images side by side
    Mat img_corr(Size(img1.cols + img2.cols, MAX(img1.rows, img2.rows)), CV_8UC3);
    img_corr = Scalar::all(0);   // black background

    // Copy img1 to the left part of the canvas (converted to 3 channels)
    Mat part = img_corr(Rect(0, 0, img1.cols, img1.rows));
    cvtColor(img1, part, COLOR_GRAY2RGB);

    // Copy img2 to the right part of the canvas
    part = img_corr(Rect(img1.cols, 0, img2.cols, img2.rows));
    cvtColor(img2, part, COLOR_GRAY2RGB);

    // Filled red circles at the keypoints of img1
    for (size_t i = 0; i < features1.size(); i++)
    {
        circle(img_corr, features1[i].pt, 3, Scalar(0, 0, 255), -1);
    }

    // Filled red circles at the keypoints of img2 (shifted right by img1.cols)
    for (size_t i = 0; i < features2.size(); i++)
    {
        Point pt(cvRound(features2[i].pt.x + img1.cols), cvRound(features2[i].pt.y));
        circle(img_corr, pt, 3, Scalar(0, 0, 255), -1);
    }

    // Green line for every match: queryIdx indexes features2 (img2), trainIdx indexes features1 (img1)
    for (size_t i = 0; i < desc_idx.size(); i++)
    {
        const KeyPoint& kp2 = features2[desc_idx[i].queryIdx];
        Point pt(cvRound(kp2.pt.x + img1.cols), cvRound(kp2.pt.y));
        line(img_corr, features1[desc_idx[i].trainIdx].pt, pt, Scalar(0, 255, 0));
    }

    return img_corr;
}

#endif // HAVE_OPENCV_NONFREE
```

This sample shows how to use OpenCV's generic descriptor matcher (GenericDescriptorMatcher) to match keypoints between two images. Its main steps are:

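- Detect keypoints in both images with SURF
- Match the keypoints between the two images with the specified algorithm (e.g. FERN)
- Visualize the result: show the two images side by side and connect the matched keypoints with lines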
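The canvas logic of DrawCorrespondences can be mirrored in a few lines of NumPy. It places both images side by side and shifts img2's keypoints right by img1's width. This is a minimal sketch that assumes 8-bit grayscale inputs given as NumPy arrays. side_by_side and to_right_half are hypothetical helper names, and the actual circle and line drawing is left out.

```python
import numpy as np


def side_by_side(img1, img2):
    """Place two 8-bit grayscale images next to each other on a black 3-channel canvas."""
    h = max(img1.shape[0], img2.shape[0])
    w = img1.shape[1] + img2.shape[1]
    canvas = np.zeros((h, w, 3), dtype=np.uint8)
    # Adding a trailing axis broadcasts the gray value into all three channels
    canvas[:img1.shape[0], :img1.shape[1]] = img1[..., None]
    canvas[:img2.shape[0], img1.shape[1]:] = img2[..., None]
    return canvas


def to_right_half(pt, img1_width):
    """Map a keypoint (x, y) of img2 to canvas pixel coordinates (shifted right by img1's width)."""
    x, y = pt
    return int(round(x + img1_width)), int(round(y))


img1 = np.full((2, 3), 10, dtype=np.uint8)
img2 = np.full((4, 2), 200, dtype=np.uint8)
canvas = side_by_side(img1, img2)
print(canvas.shape)                               # (4, 5, 3)
print(to_right_half((1.2, 2.7), img1.shape[1]))   # (4, 3)
```

The canvas height is the larger of the two image heights, so the area below the shorter image stays black, just as with Scalar::all(0) in the C++ code.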

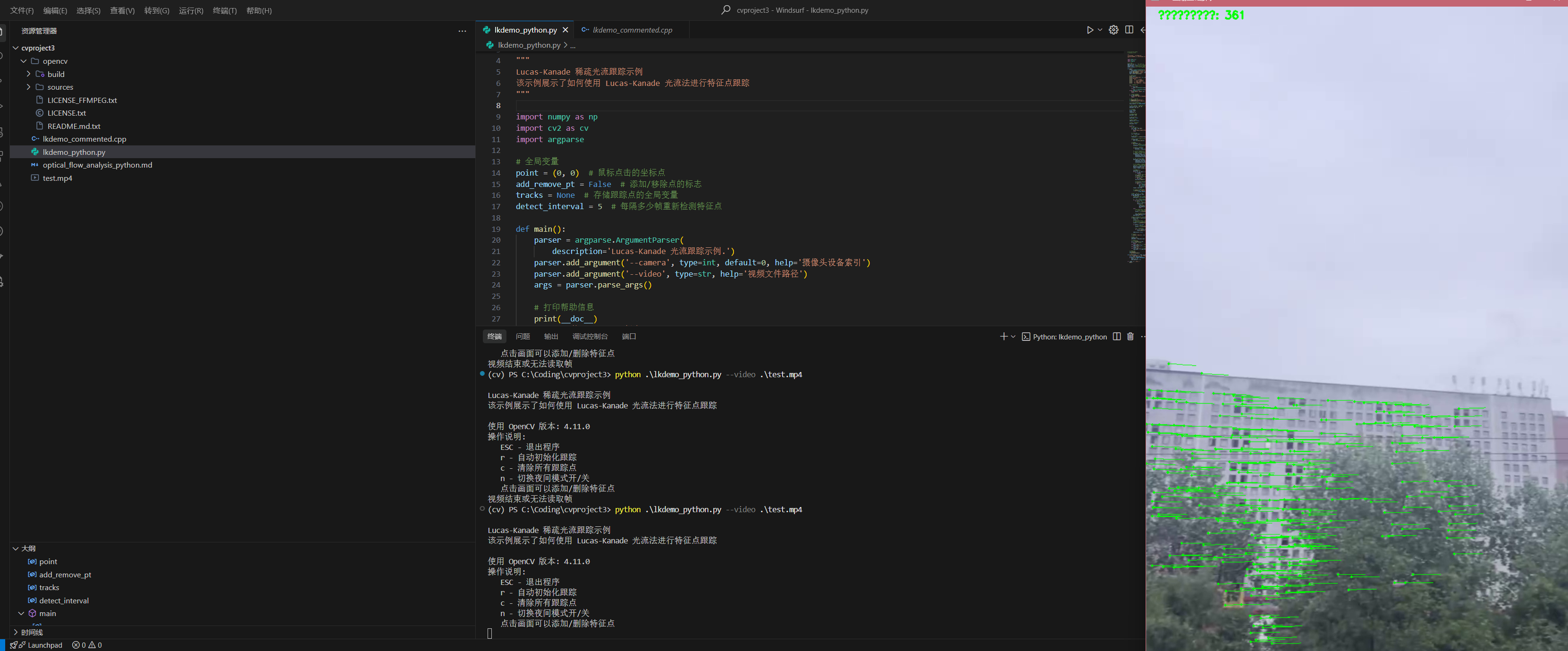

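I tried to compile and run this sample and ran into several problems.

First, the CMakeLists of the old OpenCV version do not work with the latest CMake 4+.

So I switched to an older CMake (3.10+), only to find that my C++ compiler toolchain was too new for the build.

My conclusion: without a matching (virtual) environment, it is hard to reproduce the samples of old OpenCV versions.

So I gave up on the old version and moved on to the new one: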

```python
import sys

import cv2
import numpy as np

# Load both images as grayscale
img1 = cv2.imread('img1.jpg', cv2.IMREAD_GRAYSCALE)
img2 = cv2.imread('img2.jpg', cv2.IMREAD_GRAYSCALE)

if img1 is None or img2 is None:
    print('Please make sure img1.jpg and img2.jpg are in the demo directory!')
    sys.exit(1)
```

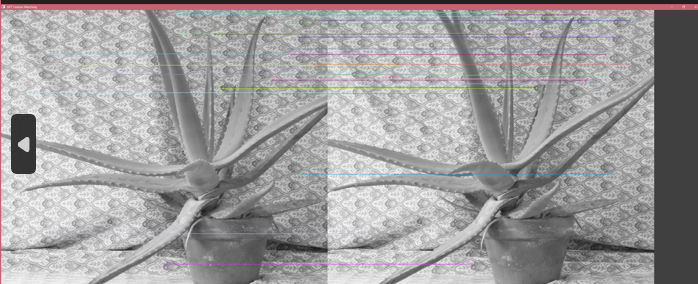

```python
# SIFT keypoint matching
def sift_match(img1, img2):
    if not hasattr(cv2, 'SIFT_create'):
        print('This OpenCV build does not provide SIFT')
        return None

    sift = cv2.SIFT_create()
    kp1, des1 = sift.detectAndCompute(img1, None)
    kp2, des2 = sift.detectAndCompute(img2, None)

    # L2 (Euclidean) distance for float descriptors; crossCheck keeps only mutual best matches
    matcher = cv2.BFMatcher(cv2.NORM_L2, crossCheck=True)
    matches = matcher.match(des1, des2)
    matches = sorted(matches, key=lambda x: x.distance)

    # Draw the 30 best matches; flags=2 (NOT_DRAW_SINGLE_POINTS) hides unmatched keypoints
    img_matches = cv2.drawMatches(img1, kp1, img2, kp2, matches[:30], None, flags=2)
    return img_matches
```

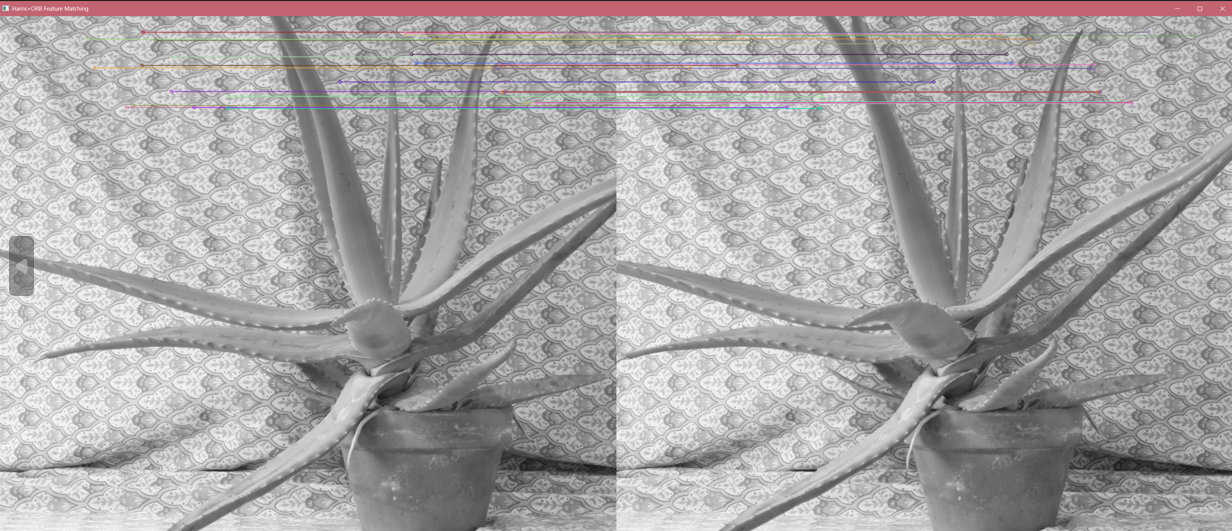

```python
# Harris corners + ORB descriptors
def harris_orb_match(img1, img2):
    orb = cv2.ORB_create()

    # Harris corner detection
    def get_harris_keypoints(img):
        harris = cv2.cornerHarris(np.float32(img), 2, 3, 0.04)
        harris = cv2.dilate(harris, None)
        thresh = 0.01 * harris.max()
        keypoints = np.argwhere(harris > thresh)
        # Convert to cv2.KeyPoint: argwhere yields (row, col), KeyPoint expects (x, y)
        keypoints = [cv2.KeyPoint(float(pt[1]), float(pt[0]), 3) for pt in keypoints]
        return keypoints

    kp1 = get_harris_keypoints(img1)
    kp2 = get_harris_keypoints(img2)

    # Compute ORB descriptors at the Harris corners
    # (compute() drops keypoints for which no descriptor can be computed, e.g. near the border)
    kp1, des1 = orb.compute(img1, kp1)
    kp2, des2 = orb.compute(img2, kp2)

    matcher = cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck=True)
    matches = matcher.match(des1, des2)
    matches = sorted(matches, key=lambda x: x.distance)

    img_matches = cv2.drawMatches(img1, kp1, img2, kp2, matches[:30], None, flags=2)
    return img_matches
```

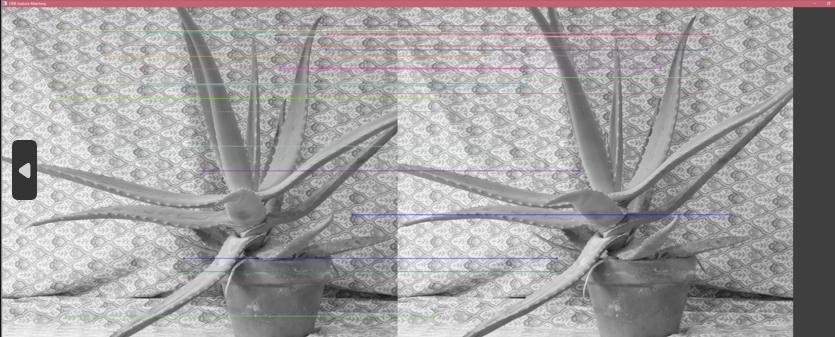

```python
# ORB keypoint matching
def orb_match(img1, img2):
    orb = cv2.ORB_create()
    kp1, des1 = orb.detectAndCompute(img1, None)
    kp2, des2 = orb.detectAndCompute(img2, None)

    # Hamming distance for ORB's binary descriptors
    matcher = cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck=True)
    matches = matcher.match(des1, des2)
    matches = sorted(matches, key=lambda x: x.distance)

    img_matches = cv2.drawMatches(img1, kp1, img2, kp2, matches[:30], None, flags=2)
    return img_matches
```

```python
# Show the matching results
sift_img = sift_match(img1, img2)
if sift_img is not None:
    cv2.imshow('SIFT Feature Matching', sift_img)

harris_img = harris_orb_match(img1, img2)
cv2.imshow('Harris+ORB Feature Matching', harris_img)

orb_img = orb_match(img1, img2)
cv2.imshow('ORB Feature Matching', orb_img)

cv2.waitKey(0)
cv2.destroyAllWindows()
```

Figures: the input images img1 and img2, followed by the matching results (Harris+ORB, SIFT, ORB).

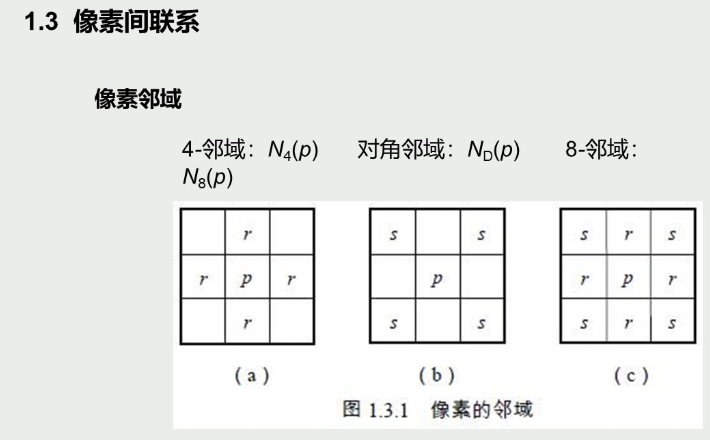

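A closer look at the three matching methods (with the corresponding code)

SIFT

SIFT (Scale-Invariant Feature Transform) is a powerful algorithm for detecting and describing local features:

How it works:

- Detects extrema in scale space using a Difference-of-Gaussians (DoG) pyramid
- Refines the position of each candidate keypoint and discards unstable ones
- Assigns each keypoint a dominant orientation, which makes it rotation invariant
- Computes a 128-dimensional descriptor for each keypoint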
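The DoG step above can be illustrated with a heavily simplified NumPy sketch. It assumes a single octave of five Gaussian levels, sigma0 = 1.6 and a factor k = √2 between levels (assumed values). It only finds points that are extrema of their 3×3×3 neighborhood in the DoG stack. There is no sub-pixel refinement, no edge-response rejection, and no orientation or descriptor step, so this is not OpenCV's SIFT implementation. gaussian_blur and dog_extrema are hypothetical helper names.

```python
import numpy as np


def gaussian_blur(img, sigma):
    """Separable Gaussian blur with edge padding (kernel truncated at 3 sigma)."""
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    kernel = np.exp(-x ** 2 / (2 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="edge")
    # Horizontal pass, then vertical pass; "valid" mode removes the padding again
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")


def dog_extrema(img, sigma0=1.6, k=2 ** 0.5, num_blurs=5, threshold=0.01):
    """Return (x, y, dog_index) for points that are extrema of their 3x3x3 DoG neighborhood."""
    img = img.astype(np.float64)
    blurred = [gaussian_blur(img, sigma0 * k ** i) for i in range(num_blurs)]
    # Differences of adjacent Gaussian levels approximate the scale-normalized
    # Laplacian of Gaussian (up to a constant factor)
    dog = np.stack([b2 - b1 for b1, b2 in zip(blurred, blurred[1:])])
    found = []
    n_dog, h, w = dog.shape
    for s in range(1, n_dog - 1):          # need a DoG level above and below
        for y in range(1, h - 1):
            for x in range(1, w - 1):
                v = dog[s, y, x]
                if abs(v) < threshold:
                    continue               # skip low-contrast points
                cube = dog[s - 1:s + 2, y - 1:y + 2, x - 1:x + 2]
                if v == cube.max() or v == cube.min():
                    found.append((x, y, s))
    return found


# A bright Gaussian blob centred at (20, 20) on a dark background
yy, xx = np.mgrid[0:41, 0:41]
blob = np.exp(-((xx - 20) ** 2 + (yy - 20) ** 2) / (2 * 3.0 ** 2))
points = dog_extrema(blob)
print((20, 20) in [(x, y) for x, y, _ in points])
```

For a bright blob, the DoG value at its center is negative, so the blob shows up as a minimum across both space and scale.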

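Implementation:

```python
# Create a SIFT detector/extractor
sift = cv2.SIFT_create()

# Detect keypoints and compute descriptors
kp1, des1 = sift.detectAndCompute(img1, None)  # keypoints and descriptors of the first image
kp2, des2 = sift.detectAndCompute(img2, None)  # keypoints and descriptors of the second image
```

Key properties: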

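- Robust to scaling, rotation and illumination changes, and partially robust to viewpoint changes
- Keypoints are well distributed and highly repeatable
- Descriptors are highly distinctive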

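Matching:

```python
# Brute-force matcher with the L2 norm (Euclidean distance);
# crossCheck=True keeps a pair only if each descriptor is the other's best match
matcher = cv2.BFMatcher(cv2.NORM_L2, crossCheck=True)

# Match the descriptors
matches = matcher.match(des1, des2)
```

- Similarity between descriptors is measured by the Euclidean (L2) distance
- The smaller the distance, the better the match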
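The behavior of BFMatcher(cv2.NORM_L2, crossCheck=True) can be reproduced with a small NumPy sketch. It computes all pairwise Euclidean distances and keeps a pair (i, j) only if j is the nearest neighbor of i and i is the nearest neighbor of j. bf_match_l2_crosscheck is a hypothetical name, and its tie-breaking may differ from OpenCV's.

```python
import numpy as np


def bf_match_l2_crosscheck(des1, des2):
    """Return (i, j, distance) for mutual nearest neighbours, sorted by distance."""
    d1 = np.asarray(des1, dtype=np.float64)
    d2 = np.asarray(des2, dtype=np.float64)
    # Pairwise Euclidean distances, shape (len(d1), len(d2))
    dists = np.sqrt(((d1[:, None, :] - d2[None, :, :]) ** 2).sum(axis=2))
    nn12 = dists.argmin(axis=1)  # best j for every i
    nn21 = dists.argmin(axis=0)  # best i for every j
    matches = [(i, int(j), float(dists[i, j]))
               for i, j in enumerate(nn12) if nn21[j] == i]
    return sorted(matches, key=lambda m: m[2])


des1 = np.array([[0, 0], [10, 10], [5, 5]], dtype=np.float32)
des2 = np.array([[0, 1], [10, 9]], dtype=np.float32)
print(bf_match_l2_crosscheck(des1, des2))  # [(0, 0, 1.0), (1, 1, 1.0)]
```

Descriptor 2 in des1 is equally far from both candidates. Its best candidate, des2[0], prefers des1[0], so the cross-check drops it.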

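Pros and cons:

- Pros: high matching accuracy and strong robustness
- Cons: computationally expensive and poorly suited to real-time use; SIFT used to be patent-encumbered, but the patent expired in 2020 and SIFT has been part of the main OpenCV module (cv2.SIFT_create) since OpenCV 4.4.0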

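ORB

ORB (Oriented FAST and Rotated BRIEF) is an efficient feature detection and description algorithm:

How it works:

- Combines the FAST corner detector with an improved BRIEF descriptor
- Uses an image pyramid for multi-scale detection
- Computes each keypoint's orientation with the intensity centroid method, which gives rotation invariance
- Produces binary descriptors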
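The intensity-centroid orientation can be sketched in NumPy. Compute the first-order moments m10 and m01 of a patch relative to its center, then take atan2(m01, m10). This minimal sketch assumes a square patch centered on the keypoint, whereas ORB itself uses a circular region. patch_orientation is a hypothetical helper name.

```python
import numpy as np


def patch_orientation(patch):
    """Angle (degrees) from the patch centre to its intensity centroid, image axes (y points down)."""
    patch = np.asarray(patch, dtype=np.float64)
    h, w = patch.shape
    ys, xs = np.mgrid[0:h, 0:w].astype(np.float64)
    xs -= (w - 1) / 2.0  # x relative to the patch centre
    ys -= (h - 1) / 2.0  # y relative to the patch centre
    m10 = (xs * patch).sum()  # first-order moment in x
    m01 = (ys * patch).sum()  # first-order moment in y
    return float(np.degrees(np.arctan2(m01, m10)))


right = np.zeros((31, 31))
right[:, 16:] = 1.0   # bright right half
bottom = np.zeros((31, 31))
bottom[16:, :] = 1.0  # bright bottom half
print(round(patch_orientation(right), 3), round(patch_orientation(bottom), 3))  # 0.0 90.0
```

Because image y points down, 90 degrees here means "toward the bottom of the patch".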

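Implementation:

```python
# Create an ORB detector/extractor
orb = cv2.ORB_create()

# Detect keypoints and compute descriptors
kp1, des1 = orb.detectAndCompute(img1, None)  # keypoints and descriptors of the first image
kp2, des2 = orb.detectAndCompute(img2, None)  # keypoints and descriptors of the second image
```

Key properties: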

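- Very fast to compute, suitable for real-time applications
- Produces binary descriptors with a small memory footprint
- Reasonably robust to moderate rotation and scale changes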
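The memory difference is easy to quantify. This minimal sketch assumes OpenCV's default descriptor formats: 128 float32 values per SIFT keypoint and 32 uint8 bytes (256 bits) per ORB keypoint. Zero-filled arrays for a hypothetical 1000 keypoints stand in for real descriptors.

```python
import numpy as np

n = 1000  # hypothetical number of keypoints
sift_des = np.zeros((n, 128), dtype=np.float32)  # SIFT: 128 x 4 bytes per keypoint
orb_des = np.zeros((n, 32), dtype=np.uint8)      # ORB: 32 bytes = 256 bits per keypoint

print(sift_des.nbytes, orb_des.nbytes, sift_des.nbytes // orb_des.nbytes)  # 512000 32000 16
```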

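Matching:

```python
# Brute-force matcher with the Hamming distance for ORB's binary descriptors
matcher = cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck=True)

# Match the descriptors
matches = matcher.match(des1, des2)

# Sort by distance (best matches first)
matches = sorted(matches, key=lambda x: x.distance)
```

- Similarity between binary descriptors is measured by the Hamming distance
- The Hamming distance (the number of differing bits) is very cheap to compute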
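The Hamming distance between two binary descriptors is the number of set bits in their XOR. Here is a minimal NumPy sketch. It assumes descriptors stored as uint8 byte arrays, the way OpenCV returns ORB descriptors. hamming is a hypothetical helper name, and cv2.NORM_HAMMING computes the same quantity much faster.

```python
import numpy as np


def hamming(d1, d2):
    """Number of differing bits between two uint8 descriptors of equal length."""
    a = np.asarray(d1, dtype=np.uint8)
    b = np.asarray(d2, dtype=np.uint8)
    # XOR marks the differing bits; unpackbits expands each byte into 8 bits
    return int(np.unpackbits(np.bitwise_xor(a, b)).sum())


print(hamming([0b10101010], [0b01010101]))  # 8
print(hamming([255, 0], [255, 1]))          # 1
print(hamming(np.zeros(32, np.uint8), np.full(32, 255, np.uint8)))  # 256, the maximum for 32 bytes
```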

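Pros and cons:

- Pros: fast, patent-free, well suited to resource-constrained devices
- Cons: less robust than SIFT to large scale changes and viewpoint changes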

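Harris corners + ORB descriptors

This hybrid approach combines the Harris corner detector with ORB descriptors:

How it works:

- Harris detects corners (regions where the image intensity changes strongly in more than one direction)
- ORB computes descriptors at these corners
- Combining the two gives a complete detect, describe and match pipeline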
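The Harris response behind cv2.cornerHarris can be sketched in NumPy. Sum the gradient products Ix², Iy² and IxIy over a window to form the structure matrix M, then compute R = det(M) - k·trace(M)². This is a simplified sketch, not OpenCV's implementation. It assumes central differences (np.gradient) instead of a Sobel operator and an unweighted 3×3 window. harris_response is a hypothetical name. R is positive at corners, negative along edges and zero in flat regions.

```python
import numpy as np


def harris_response(img, k=0.04, radius=1):
    """Harris response R = det(M) - k * trace(M)**2 with a (2*radius+1)^2 box window."""
    img = np.asarray(img, dtype=np.float64)
    iy, ix = np.gradient(img)  # derivatives along rows (y) and columns (x)

    def box_sum(a):
        # Sum of a over the window around each pixel (zero padding at the border)
        p = np.pad(a, radius, mode="constant")
        out = np.zeros_like(a)
        size = 2 * radius + 1
        for dy in range(size):
            for dx in range(size):
                out += p[dy:dy + a.shape[0], dx:dx + a.shape[1]]
        return out

    sxx, syy, sxy = box_sum(ix * ix), box_sum(iy * iy), box_sum(ix * iy)
    det = sxx * syy - sxy ** 2
    trace = sxx + syy
    return det - k * trace ** 2


img = np.zeros((20, 20))
img[5:15, 5:15] = 1.0  # white square on a black background
r = harris_response(img)
print(r[5, 5] > 0, r[5, 10] < 0, r[10, 10] == 0)  # corner, edge, flat region: True True True
```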

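Implementation: Harris corner detection:

```python
# Detect corners with cornerHarris
# 2 is blockSize: size of the neighborhood considered for corner detection
# 3 is ksize: aperture size of the Sobel operator
# 0.04 is k, the free parameter of the Harris detector
harris = cv2.cornerHarris(np.float32(img), 2, 3, 0.04)

# Dilate the response map; because this happens before thresholding,
# pixels next to a strong corner also pass the threshold
harris = cv2.dilate(harris, None)

# Threshold: keep only responses above 1% of the maximum
thresh = 0.01 * harris.max()
```

Converting Harris corners to KeyPoints: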

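```python
# Coordinates of all pixels above the threshold, as (row, col) pairs
keypoints = np.argwhere(harris > thresh)

# Convert them to cv2.KeyPoint objects for the later steps.
# Note the axis swap: argwhere returns (row, col), while KeyPoint expects (x, y) = (col, row)
keypoints = [cv2.KeyPoint(float(pt[1]), float(pt[0]), 3) for pt in keypoints]
```

Computing the descriptors with ORB: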

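```python
# Compute ORB descriptors at the Harris corners
# ORB descriptors are binary and cheap to compute
kp1, des1 = orb.compute(img1, kp1)  # ORB descriptors for the corners of the first image
```

Key properties: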

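- Harris corner detection is invariant to rotation but sensitive to scale changes
- Thresholding keeps only salient corners, which improves keypoint quality
- ORB descriptors are binary and cheap to compute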
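In the code above, cv2.dilate runs before the threshold. As a result, every pixel next to a strong corner also passes the test, and each corner tends to yield a small cluster of neighboring keypoints. One common alternative, not what the code above does, is to keep only pixels that equal the maximum of their neighborhood. In OpenCV terms this is roughly harris == cv2.dilate(harris, None). Here is a minimal NumPy sketch of that non-maximum suppression. local_max_keypoints is a hypothetical helper name, and the 1% relative threshold is taken from the code above.

```python
import numpy as np


def local_max_keypoints(response, rel_thresh=0.01, radius=1):
    """(x, y) positions that are local maxima of response and exceed rel_thresh * max."""
    response = np.asarray(response, dtype=np.float64)
    h, w = response.shape
    padded = np.pad(response, radius, mode="constant", constant_values=-np.inf)
    local_max = np.full_like(response, -np.inf)
    size = 2 * radius + 1
    for dy in range(size):
        for dx in range(size):
            local_max = np.maximum(local_max, padded[dy:dy + h, dx:dx + w])
    mask = (response == local_max) & (response > rel_thresh * response.max())
    rows, cols = np.nonzero(mask)
    # nonzero gives (row, col); keypoints need (x, y) = (col, row)
    return list(zip(cols.tolist(), rows.tolist()))


resp = np.zeros((7, 7))
resp[2, 3] = 5.0  # strong corner
resp[2, 4] = 4.0  # its neighbour: suppressed
resp[5, 1] = 1.0  # weaker, isolated corner
print(local_max_keypoints(resp))  # [(3, 2), (1, 5)]
```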

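Matching:

```python
# Brute-force matcher with the Hamming distance for binary descriptors
matcher = cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck=True)

# Match the descriptors
matches = matcher.match(des1, des2)

# Sort by distance (best matches first)
matches = sorted(matches, key=lambda x: x.distance)
```

- Binary descriptors are again compared with the Hamming distance
- Sorting by distance puts the best pairs first (the demo draws the top 30)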

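Pros and cons:

- Pros: accurate corner localization and cheap descriptor computation
- Cons: poor adaptation to scale changes, and the number of keypoints depends heavily on the Harris parameters (blockSize, ksize, k) and the threshold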

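Comparison

Each of the three methods has its own strengths and suits different applications:

- SIFT: when accuracy matters most and compute resources are sufficient
- ORB: when real-time processing is required or compute resources are limited
- Harris+ORB: a compromise that may offer a better balance in some specific scenarios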

```python
# Show the matching results
# Compute and display the results of the three matching methods
sift_img = sift_match(img1, img2)
if sift_img is not None:
    cv2.imshow('SIFT Feature Matching', sift_img)  # SIFT matches

harris_img = harris_orb_match(img1, img2)
cv2.imshow('Harris+ORB Feature Matching', harris_img)  # Harris+ORB matches
```