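Experiment Objective

Human natural language is rich in emotional coloring: it expresses emotions (such as sadness or joy), moods (such as weariness or melancholy), preferences (such as liking or dislike), personality traits, and stances. Sentiment analysis is applied in scenarios such as product preference analysis, purchase decisions, and public-opinion analysis. Having a machine analyze these sentiment tendencies automatically helps companies understand how consumers feel about their products, which gives them a basis for product improvement. It also helps companies gauge the attitudes of their business partners and so make better business decisions.

The goal of this experiment is to learn how to analyze, process, summarize, and reason about subjective text that carries emotional coloring.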

Instruments and Materials

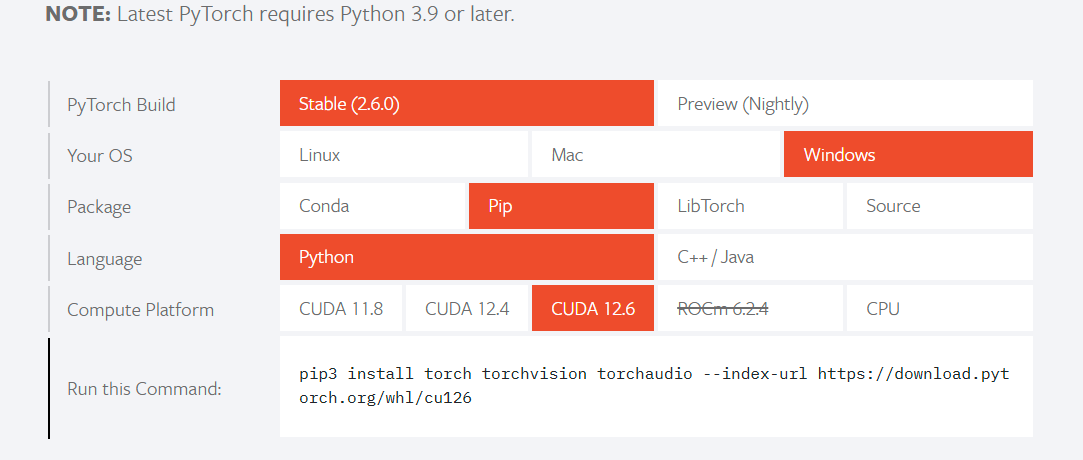

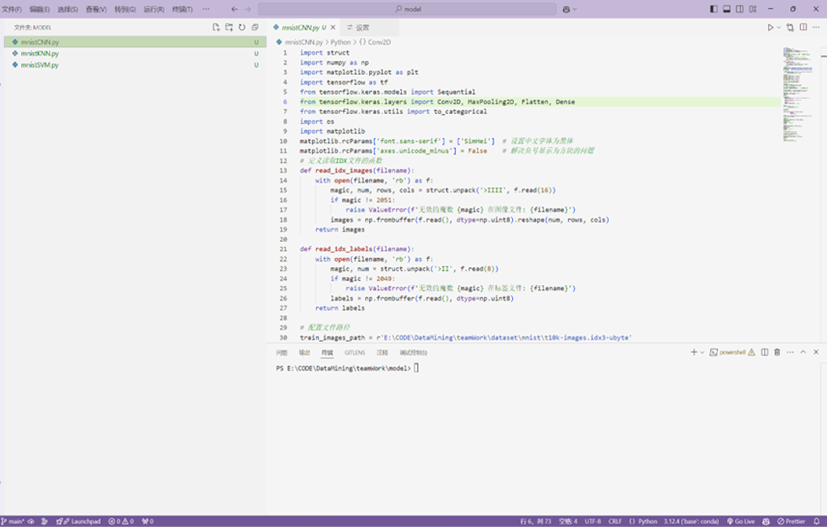

Environment: Python 3.12.4 (Anaconda3)

Development tool: Visual Studio Code

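Original Record of the Experimental Procedure (data, charts, calculations, etc.)

Theory and practice reference: 用户情感分析.ipynb (the "User Sentiment Analysis" notebook)

Some interfaces are taken from utils.py

Approach: use the Python library PaddleNLP for user sentiment analysis. First, format the training set train.txt with Python (this preliminary processing produces formatted_train.txt), then load the result and train the model. Finally, use the formatted test set (formatted_test.txt) to test the quality of the model.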
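The formatting step can be illustrated on a small in-memory sample. This is only a sketch: it assumes the three-line record layout noted in the script's comments (text on the first line, label on the third), the function name `format_records` is hypothetical, and the sample sentences and the middle line are made up because the report does not show the raw data.

```python
def format_records(lines):
    """Turn three-line records into 'label<TAB>text' rows with a header."""
    rows = ["label\ttext_a"]
    # Step through the input three lines at a time; an incomplete
    # trailing record is ignored.
    for start in range(0, len(lines) - len(lines) % 3, 3):
        text = lines[start].rstrip("\n")
        label = lines[start + 2].rstrip("\n")
        rows.append(f"{label}\t{text}")
    return rows


# Hypothetical sample: the middle line of each record is a placeholder,
# since the report does not say what that line holds.
sample = [
    "物流很快,包装完好\n",
    "(unused middle line)\n",
    "2\n",
    "质量太差了\n",
    "(unused middle line)\n",
    "0\n",
]
formatted = format_records(sample)
for row in formatted:
    print(row)
```

Each output row holds the label, a tab, and the text, which is the layout the training script later reads back.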

utils.py

import numpy as np
import paddle
import paddle.nn.functional as F
from paddlenlp.data import Stack, Tuple, Pad


def predict(model, data, tokenizer, label_map, batch_size=1):
    """
    Predicts the data labels.

    Args:
        model (obj:`paddle.nn.Layer`): A model to classify texts.
        data (obj:`list[dict]`): The input examples. Each element is a dict with a
            `text` field, as expected by `convert_example`.
        tokenizer(obj:`PretrainedTokenizer`): This tokenizer inherits from :class:`~paddlenlp.transformers.PretrainedTokenizer`
            which contains most of the methods. Users should refer to the superclass for more information regarding methods.
        label_map(obj:`dict`): The label id (key) to label str (value) map.
        batch_size(obj:`int`, defaults to 1): The batch size.

    Returns:
        results(obj:`list`): The predicted labels, one per input example.
    """
    examples = []
    for text in data:
        input_ids, segment_ids = convert_example(
            text,
            tokenizer,
            max_seq_length=128,
            is_test=True)
        examples.append((input_ids, segment_ids))

    batchify_fn = lambda samples, fn=Tuple(
        Pad(axis=0, pad_val=tokenizer.pad_token_id),  # input id
        Pad(axis=0, pad_val=tokenizer.pad_token_type_id),  # segment id
    ): fn(samples)

    # Separate the data into batches.
    batches = []
    one_batch = []
    for example in examples:
        one_batch.append(example)
        if len(one_batch) == batch_size:
            batches.append(one_batch)
            one_batch = []
    if one_batch:
        # The last batch, whose size is smaller than batch_size.
        batches.append(one_batch)

    results = []
    model.eval()
    for batch in batches:
        input_ids, segment_ids = batchify_fn(batch)
        input_ids = paddle.to_tensor(input_ids)
        segment_ids = paddle.to_tensor(segment_ids)
        logits = model(input_ids, segment_ids)
        probs = F.softmax(logits, axis=1)
        idx = paddle.argmax(probs, axis=1).numpy()
        idx = idx.tolist()
        labels = [label_map[i] for i in idx]
        results.extend(labels)
    return results
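As an aside to the listing, the two ideas inside `predict` (splitting the examples into batches with a possibly shorter last batch, then turning logits into labels through softmax and argmax) can be tried without Paddle. The sketch below uses only the standard library; the helper names, the toy logits, and the English label map are illustrative assumptions and are not part of utils.py.

```python
import math


def split_into_batches(items, batch_size):
    """Group items into lists of batch_size; the last list may be shorter."""
    return [items[i:i + batch_size] for i in range(0, len(items), batch_size)]


def softmax(row):
    """Numerically stable softmax over one row of logits."""
    peak = max(row)
    exps = [math.exp(value - peak) for value in row]
    total = sum(exps)
    return [e / total for e in exps]


def logits_to_labels(logits, label_map):
    """Map each row of logits to the label of its most probable class."""
    labels = []
    for row in logits:
        probs = softmax(row)
        labels.append(label_map[probs.index(max(probs))])
    return labels


# Made-up logits for two inputs and three classes.
toy_logits = [[0.2, 1.5, -0.3], [2.0, 0.1, 0.4]]
toy_label_map = {0: "negative", 1: "neutral", 2: "positive"}
batches = split_into_batches(list(range(5)), 2)
toy_labels = logits_to_labels(toy_logits, toy_label_map)
print(batches)
print(toy_labels)
```

Because softmax preserves the ordering of the logits, taking the argmax of the probabilities selects the same class as taking the argmax of the raw logits.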

@paddle.no_grad()
def evaluate(model, criterion, metric, data_loader):
    """
    Given a dataset, evaluates the model and computes the metric.

    Args:
        model(obj:`paddle.nn.Layer`): A model to classify texts.
        criterion(obj:`paddle.nn.Layer`): It can compute the loss.
        metric(obj:`paddle.metric.Metric`): The evaluation metric.
        data_loader(obj:`paddle.io.DataLoader`): The dataset loader which generates batches.
    """
    model.eval()
    metric.reset()
    losses = []
    for batch in data_loader:
        input_ids, token_type_ids, labels = batch
        logits = model(input_ids, token_type_ids)
        loss = criterion(logits, labels)
        losses.append(loss.numpy())
        correct = metric.compute(logits, labels)
        metric.update(correct)
    accu = metric.accumulate()
    print("eval loss: %.5f, accu: %.5f" % (np.mean(losses), accu))
    model.train()
    metric.reset()
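As an aside to the listing, the bookkeeping in `evaluate` can be mimicked in plain Python: the accuracy is accumulated per example across batches, while the reported loss is the unweighted mean of the per-batch losses. The class `RunningAccuracy` and all numbers below are hypothetical; this is a sketch of the idea, not of how `paddle.metric.Accuracy` is implemented.

```python
class RunningAccuracy:
    """Accumulates correct and total counts across batches."""

    def __init__(self):
        self.correct = 0
        self.total = 0

    def update(self, predicted, expected):
        # Count the positions where the prediction matches the label.
        self.correct += sum(p == e for p, e in zip(predicted, expected))
        self.total += len(expected)

    def accumulate(self):
        return self.correct / self.total if self.total else 0.0


# Made-up predictions and labels for two batches.
acc = RunningAccuracy()
acc.update([0, 1, 2, 2], [0, 1, 1, 2])  # 3 of 4 correct
acc.update([1, 0], [1, 2])              # 1 of 2 correct

# Made-up per-batch losses; their plain mean ignores batch sizes.
batch_losses = [0.6, 0.4]
mean_loss = sum(batch_losses) / len(batch_losses)
print("eval loss: %.5f, accu: %.5f" % (mean_loss, acc.accumulate()))
```

When the last batch is smaller than the others, the unweighted mean gives its loss slightly more influence per example, which is usually negligible for a test set of this size.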

def convert_example(example, tokenizer, max_seq_length=512, is_test=False):
    """
    Builds model inputs from a sequence or a pair of sequences for sequence classification tasks
    by concatenating and adding special tokens. It also creates a mask from the two sequences passed,
    to be used in a sequence-pair classification task.

    A BERT sequence has the following format:

    - single sequence: ``[CLS] X [SEP]``
    - pair of sequences: ``[CLS] A [SEP] B [SEP]``

    A BERT sequence pair mask has the following format:
    ::
        0 0 0 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1 1
        | first sequence    | second sequence |

    If only one sequence, only returns the first portion of the mask (0's).

    Args:
        example(obj:`dict`): The input example, containing `text` and, if it is labeled, `label`.
        tokenizer(obj:`PretrainedTokenizer`): This tokenizer inherits from :class:`~paddlenlp.transformers.PretrainedTokenizer`
            which contains most of the methods. Users should refer to the superclass for more information regarding methods.
        max_seq_length(obj:`int`): The maximum total input sequence length after tokenization.
            Sequences longer than this will be truncated, sequences shorter will be padded.
        is_test(obj:`bool`, defaults to `False`): Set to `True` when the example has no label.

    Returns:
        input_ids(obj:`list[int]`): The list of token ids.
        token_type_ids(obj: `list[int]`): List of sequence pair mask.
        label(obj:`numpy.array`, data type of int64, optional): The input label if not is_test.
    """
    encoded_inputs = tokenizer(text=example["text"], max_seq_len=max_seq_length)
    input_ids = encoded_inputs["input_ids"]
    token_type_ids = encoded_inputs["token_type_ids"]

    if not is_test:
        label = np.array([example["label"]], dtype="int64")
        return input_ids, token_type_ids, label
    else:
        return input_ids, token_type_ids
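As an aside to the listing, the sequence formats and the pair mask described in the docstring can be reproduced with a toy function. `build_pair_inputs` is a hypothetical helper that works on already split tokens; a real tokenizer returns integer ids rather than strings, so this only illustrates the layout.

```python
def build_pair_inputs(tokens_a, tokens_b=None):
    """Add [CLS]/[SEP] markers and build the matching segment mask."""
    tokens = ["[CLS]"] + tokens_a + ["[SEP]"]
    token_type_ids = [0] * len(tokens)        # first sequence -> 0
    if tokens_b:
        tokens += tokens_b + ["[SEP]"]
        token_type_ids += [1] * (len(tokens_b) + 1)  # second sequence -> 1
    return tokens, token_type_ids


# Toy tokens, chosen only for illustration.
single_tokens, single_mask = build_pair_inputs(["好", "用"])
pair_tokens, pair_mask = build_pair_inputs(["好", "用"], ["很", "喜", "欢"])
print(single_tokens, single_mask)
print(pair_tokens, pair_mask)
```

For the single-sentence classification done in this experiment, only the first form occurs, so every entry of the mask is 0.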

def create_dataloader(dataset,
                      mode='train',
                      batch_size=1,
                      batchify_fn=None,
                      trans_fn=None):
    if trans_fn:
        dataset = dataset.map(trans_fn)

    # Shuffle only when training.
    shuffle = mode == 'train'
    if mode == 'train':
        batch_sampler = paddle.io.DistributedBatchSampler(
            dataset, batch_size=batch_size, shuffle=shuffle)
    else:
        batch_sampler = paddle.io.BatchSampler(
            dataset, batch_size=batch_size, shuffle=shuffle)

    return paddle.io.DataLoader(
        dataset=dataset,
        batch_sampler=batch_sampler,
        collate_fn=batchify_fn,
        return_list=True)

go.py

import paddle
import paddlenlp as ppnlp
from paddlenlp.data import Stack, Tuple, Pad
from paddlenlp.datasets import load_dataset
from utils import create_dataloader, convert_example, evaluate, predict
from tqdm import tqdm  # progress bar

# Run on the CPU
device = paddle.set_device('cpu')


def read(data_path):
    data = ['label' + '\t' + 'text_a\n']
    with open(data_path, 'r', encoding='utf-8-sig') as f:
        lines = f.readlines()
        # Every three lines form one record
        for i in range(len(lines) // 3):
            # The first line of the record is the text
            word = lines[i * 3].strip('\n')
            # The third line of the record is the label
            label = lines[i * 3 + 2].strip('\n')
            data.append(label + '\t' + word + '\n')
    return data


# Convert the training and test sets to the tab-separated format
with open('formatted_train.txt', 'w', encoding='utf-8') as f:
    f.writelines(read('train.txt'))
with open('formatted_test.txt', 'w', encoding='utf-8') as f:
    f.writelines(read('test.txt'))


# Reader for the formatted files
def read_formatted(data_path):
    with open(data_path, 'r', encoding='utf-8') as f:
        next(f)  # skip the header row
        for line in f:
            # Split on the first tab only, so a tab inside the text does not break parsing
            label, text = line.strip('\n').split('\t', 1)
            yield {'text': text, 'label': int(label)}


# Load the datasets
train_ds = load_dataset(read_formatted, data_path='formatted_train.txt', lazy=False)
test_ds = load_dataset(read_formatted, data_path='formatted_test.txt', lazy=False)

# Define the model and tokenizer

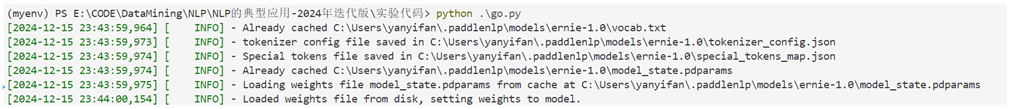

MODEL_NAME = "ernie-1.0"
tokenizer = ppnlp.transformers.ErnieTokenizer.from_pretrained(MODEL_NAME)
model = ppnlp.transformers.ErnieForSequenceClassification.from_pretrained(MODEL_NAME, num_classes=3)

# Data processing and loading
batch_size = 16  # reduced batch size
trans_func = lambda example: convert_example(example, tokenizer, max_seq_length=64)  # reduced max_seq_length
batchify_fn = lambda samples, fn=Tuple(
    Pad(axis=0, pad_val=tokenizer.pad_token_id),  # input id
    Pad(axis=0, pad_val=tokenizer.pad_token_type_id),  # segment id
    Stack(dtype="int64")  # label
): fn(samples)
train_data_loader = create_dataloader(train_ds, mode='train', batch_size=batch_size, batchify_fn=batchify_fn, trans_fn=trans_func)
test_data_loader = create_dataloader(test_ds, mode='test', batch_size=batch_size, batchify_fn=batchify_fn, trans_fn=trans_func)
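As an aside to the listing, the role of `batchify_fn` can be sketched in plain Python: the variable-length id lists of a batch are padded to the length of the longest one, and the labels are gathered into one list. The helpers `pad_batch` and `collate` are hypothetical stand-ins written for this illustration, the sample ids are made up, and the real `Pad` and `Stack` classes return NumPy arrays rather than lists.

```python
def pad_batch(sequences, pad_val=0):
    """Right-pad every sequence to the length of the longest one."""
    longest = max(len(seq) for seq in sequences)
    return [seq + [pad_val] * (longest - len(seq)) for seq in sequences]


def collate(samples, pad_token_id=0, pad_token_type_id=0):
    """Combine (input_ids, token_type_ids, label) samples into one batch."""
    input_ids, token_type_ids, labels = zip(*samples)
    return (
        pad_batch(list(input_ids), pad_token_id),
        pad_batch(list(token_type_ids), pad_token_type_id),
        [list(label) for label in labels],
    )


# Made-up samples with different lengths.
toy_samples = [
    ([1, 5, 6, 2], [0, 0, 0, 0], [2]),
    ([1, 7, 2], [0, 0, 0], [0]),
]
ids, types, labels = collate(toy_samples)
print(ids)
print(types)
print(labels)
```

Padding per batch rather than to a fixed global length keeps short batches small, which matters when training on the CPU as in this experiment.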

# Define the optimizer and loss function
num_training_steps = len(train_data_loader) * 1  # reduced number of epochs
lr_scheduler = ppnlp.transformers.LinearDecayWithWarmup(5e-5, num_training_steps, 0.1)
optimizer = paddle.optimizer.AdamW(learning_rate=lr_scheduler, parameters=model.parameters(), weight_decay=0.01)
criterion = paddle.nn.loss.CrossEntropyLoss()
metric = paddle.metric.Accuracy()
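As an aside to the listing, the shape of the learning-rate schedule can be sketched with a small function. It assumes the common definition of linear warmup followed by linear decay, with a fractional warmup converted to a step count by rounding down; the exact behaviour of `LinearDecayWithWarmup` should be checked against the PaddleNLP documentation. The function name is hypothetical, and the value 834 is the number of training steps shown by the progress bar in the terminal output.

```python
import math


def linear_decay_with_warmup(step, base_lr, total_steps, warmup):
    """Learning rate at a given step: linear ramp up, then linear decay to 0."""
    if isinstance(warmup, int):
        warmup_steps = warmup
    else:
        # A float is read as a fraction of the total number of steps.
        warmup_steps = int(math.floor(warmup * total_steps))
    if step < warmup_steps:
        factor = step / max(1, warmup_steps)
    else:
        factor = max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))
    return base_lr * factor


TOTAL_STEPS = 834  # one epoch of 834 batches
schedule = {s: linear_decay_with_warmup(s, 5e-5, TOTAL_STEPS, 0.1)
            for s in (0, 41, 83, 458, 834)}
for step, lr in schedule.items():
    print(step, lr)
```

Under these assumptions the rate climbs from 0 to 5e-5 over the first 83 steps and then falls linearly back to 0 at step 834.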

# Train the model
global_step = 0
for epoch in range(1, 2):  # reduced number of epochs
    with tqdm(total=len(train_data_loader), desc=f"Epoch {epoch}") as pbar:  # progress bar
        for step, batch in enumerate(train_data_loader, start=1):
            input_ids, segment_ids, labels = batch
            logits = model(input_ids, segment_ids)
            loss = criterion(logits, labels)
            loss.backward()
            optimizer.step()
            lr_scheduler.step()
            optimizer.clear_grad()

            global_step += 1
            if global_step % 10 == 0:
                print(f"global step {global_step}, epoch: {epoch}, batch: {step}, loss: {loss.numpy()}")
            pbar.update(1)  # advance the progress bar

# Evaluate the model
evaluate(model, criterion, metric, test_data_loader)

# Predict
data = [
    {"text": "这是一条测试数据。"},  # "This is a piece of test data."
    {"text": "这是一条负面情绪的数据。"}  # "This is a piece of data with negative sentiment."
]
label_map = {0: '负面', 1: '中立', 2: '正面'}  # negative, neutral, positive
results = predict(model, data, tokenizer, label_map, batch_size=1)
for idx, text in enumerate(data):
    print(f"预测: {text['text']} \t 情感: {results[idx]}")  # "Prediction: ... Sentiment: ..."

Run Results

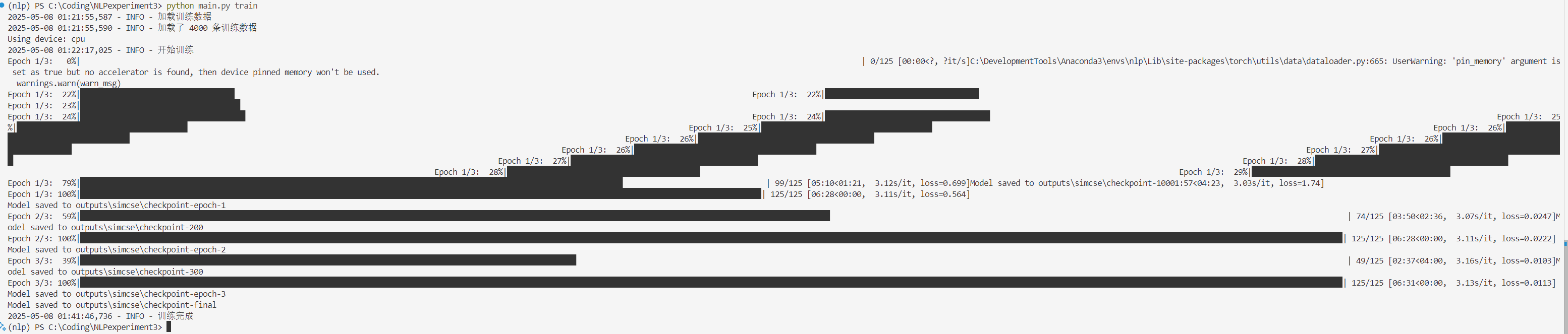

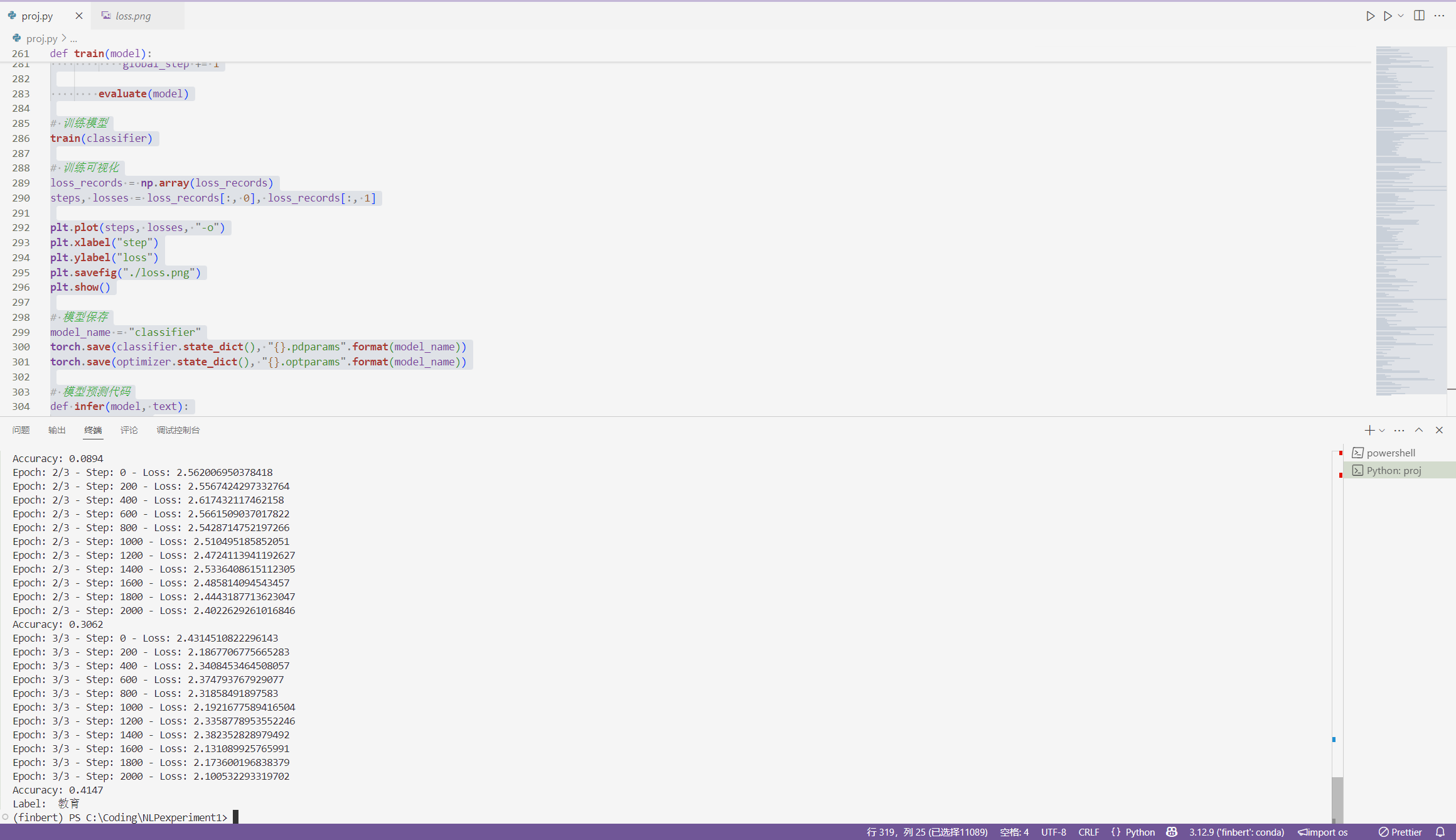

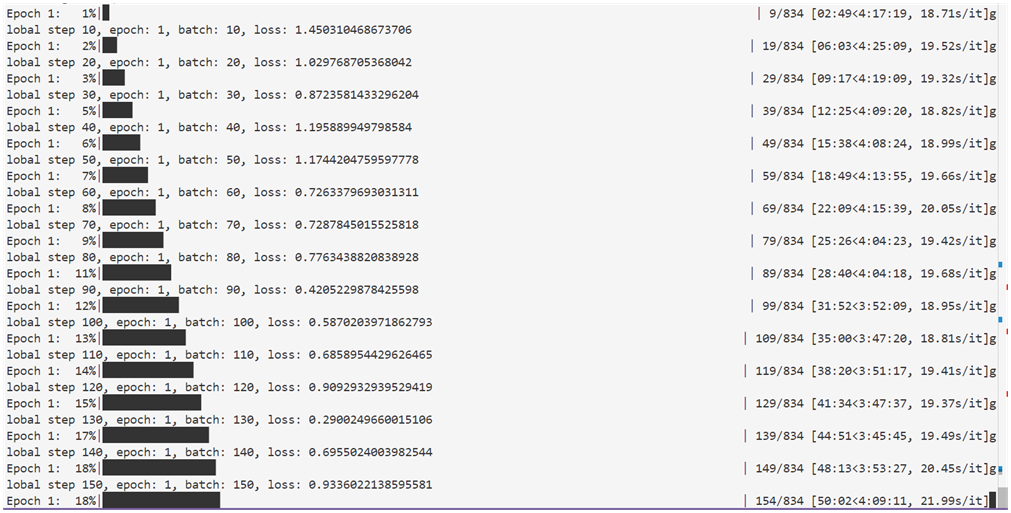

Selected screenshots

Terminal output

Epoch 1: 1%|█ | 9/834 [02:49<4:17:19, 18.71s/it]global step 10, epoch: 1, batch: 10, loss: 1.450310468673706

Epoch 1: 2%|██▏ | 19/834 [06:03<4:25:09, 19.52s/it]global step 20, epoch: 1, batch: 20, loss: 1.029768705368042

Epoch 1: 3%|███▎ | 29/834 [09:17<4:19:09, 19.32s/it]global step 30, epoch: 1, batch: 30, loss: 0.8723581433296204

Epoch 1: 5%|████▍ | 39/834 [12:25<4:09:20, 18.82s/it]global step 40, epoch: 1, batch: 40, loss: 1.195889949798584

Epoch 1: 6%|█████▌ | 49/834 [15:38<4:08:24, 18.99s/it]global step 50, epoch: 1, batch: 50, loss: 1.1744204759597778

Epoch 1: 7%|██████▋ | 59/834 [18:49<4:13:55, 19.66s/it]global step 60, epoch: 1, batch: 60, loss: 0.7263379693031311

Epoch 1: 8%|███████▊ | 69/834 [22:09<4:15:39, 20.05s/it]global step 70, epoch: 1, batch: 70, loss: 0.7287845015525818

Epoch 1: 9%|████████▉ | 79/834 [25:26<4:04:23, 19.42s/it]global step 80, epoch: 1, batch: 80, loss: 0.7763438820838928

Epoch 1: 11%|██████████ | 89/834 [28:40<4:04:18, 19.68s/it]global step 90, epoch: 1, batch: 90, loss: 0.4205229878425598

Epoch 1: 12%|███████████▏ | 99/834 [31:52<3:52:09, 18.95s/it]global step 100, epoch: 1, batch: 100, loss: 0.5870203971862793

Epoch 1: 13%|████████████▏ | 109/834 [35:00<3:47:20, 18.81s/it]global step 110, epoch: 1, batch: 110, loss: 0.6858954429626465

Epoch 1: 14%|█████████████▎ | 119/834 [38:20<3:51:17, 19.41s/it]global step 120, epoch: 1, batch: 120, loss: 0.9092932939529419

Epoch 1: 15%|██████████████▍ | 129/834 [41:34<3:47:37, 19.37s/it]global step 130, epoch: 1, batch: 130, loss: 0.2900249660015106

Epoch 1: 17%|███████████████▌ | 139/834 [44:51<3:45:45, 19.49s/it]global step 140, epoch: 1, batch: 140, loss: 0.6955024003982544

Epoch 1: 18%|████████████████▌ | 149/834 [48:13<3:53:27, 20.45s/it]global step 150, epoch: 1, batch: 150, loss: 0.9336022138595581

Epoch 1: 19%|█████████████████▋ | 159/834 [51:38<3:39:57, 19.55s/it]global step 160, epoch: 1, batch: 160, loss: 0.6705586314201355

Epoch 1: 20%|██████████████████▊ | 169/834 [54:49<3:37:31, 19.63s/it]global step 170, epoch: 1, batch: 170, loss: 0.4619864821434021

Epoch 1: 21%|███████████████████▉ | 179/834 [58:08<3:35:07, 19.71s/it]global step 180, epoch: 1, batch: 180, loss: 1.1729423999786377

Epoch 1: 23%|████████████████████▌ | 189/834 [1:01:26<3:32:45, 19.79s/it]global step 190, epoch: 1, batch: 190, loss: 0.8677840232849121

Epoch 1: 24%|█████████████████████▋ | 199/834 [1:04:42<3:27:09, 19.57s/it]global step 200, epoch: 1, batch: 200, loss: 0.7060407400131226

Epoch 1: 25%|██████████████████████▊ | 209/834 [1:07:57<3:22:27, 19.44s/it]global step 210, epoch: 1, batch: 210, loss: 0.5212971568107605

Epoch 1: 26%|███████████████████████▉ | 219/834 [1:11:12<3:19:37, 19.47s/it]global step 220, epoch: 1, batch: 220, loss: 0.7400115132331848

Epoch 1: 27%|████████████████████████▉ | 229/834 [1:14:29<3:19:45, 19.81s/it]global step 230, epoch: 1, batch: 230, loss: 0.4471248388290405

Epoch 1: 29%|██████████████████████████ | 239/834 [1:17:47<3:14:25, 19.61s/it]global step 240, epoch: 1, batch: 240, loss: 0.9759846925735474

Epoch 1: 30%|███████████████████████████▏ | 249/834 [1:21:02<3:10:19, 19.52s/it]global step 250, epoch: 1, batch: 250, loss: 0.6070269346237183

Epoch 1: 31%|████████████████████████████▎ | 259/834 [1:24:14<3:08:57, 19.72s/it]global step 260, epoch: 1, batch: 260, loss: 0.45137766003608704

Epoch 1: 32%|█████████████████████████████▎ | 269/834 [1:27:31<3:04:33, 19.60s/it]global step 270, epoch: 1, batch: 270, loss: 0.5077657699584961

Epoch 1: 33%|██████████████████████████████▍ | 279/834 [1:30:48<3:03:23, 19.83s/it]global step 280, epoch: 1, batch: 280, loss: 0.7394564151763916

Epoch 1: 35%|███████████████████████████████▌ | 289/834 [1:34:08<2:59:50, 19.80s/it]global step 290, epoch: 1, batch: 290, loss: 1.0885226726531982

Epoch 1: 36%|████████████████████████████████▌ | 299/834 [1:37:25<2:55:30, 19.68s/it]global step 300, epoch: 1, batch: 300, loss: 0.6967673897743225

Epoch 1: 37%|█████████████████████████████████▋ | 309/834 [1:40:42<2:52:07, 19.67s/it]global step 310, epoch: 1, batch: 310, loss: 0.6342006921768188

Epoch 1: 38%|██████████████████████████████████▊ | 319/834 [1:44:02<2:49:57, 19.80s/it]global step 320, epoch: 1, batch: 320, loss: 0.4676714539527893

Epoch 1: 39%|███████████████████████████████████▉ | 329/834 [1:47:12<2:40:04, 19.02s/it]global step 330, epoch: 1, batch: 330, loss: 0.2618354558944702

Epoch 1: 41%|████████████████████████████████████▉ | 339/834 [1:50:27<2:44:09, 19.90s/it]global step 340, epoch: 1, batch: 340, loss: 0.60030198097229

Epoch 1: 42%|██████████████████████████████████████ | 349/834 [1:53:41<2:35:21, 19.22s/it]global step 350, epoch: 1, batch: 350, loss: 0.893867015838623

Epoch 1: 43%|███████████████████████████████████████▏ | 359/834 [1:56:55<2:32:17, 19.24s/it]global step 360, epoch: 1, batch: 360, loss: 0.6859140992164612

Epoch 1: 44%|████████████████████████████████████████▎ | 369/834 [2:00:08<2:29:08, 19.24s/it]global step 370, epoch: 1, batch: 370, loss: 0.7351008057594299

Epoch 1: 45%|█████████████████████████████████████████▎ | 379/834 [2:03:19<2:24:31, 19.06s/it]global step 380, epoch: 1, batch: 380, loss: 0.5324974656105042

Epoch 1: 47%|██████████████████████████████████████████▍ | 389/834 [2:06:25<2:18:07, 18.62s/it]global step 390, epoch: 1, batch: 390, loss: 0.6849534511566162

Epoch 1: 48%|███████████████████████████████████████████▌ | 399/834 [2:09:31<2:15:00, 18.62s/it]global step 400, epoch: 1, batch: 400, loss: 0.1522730588912964

Epoch 1: 49%|████████████████████████████████████████████▋ | 409/834 [2:12:38<2:12:10, 18.66s/it]global step 410, epoch: 1, batch: 410, loss: 0.6080489158630371

Epoch 1: 50%|█████████████████████████████████████████████▋ | 419/834 [2:15:45<2:09:48, 18.77s/it]global step 420, epoch: 1, batch: 420, loss: 0.5999123454093933

Epoch 1: 51%|██████████████████████████████████████████████▊ | 429/834 [2:18:51<2:05:05, 18.53s/it]global step 430, epoch: 1, batch: 430, loss: 0.7193755507469177

Epoch 1: 53%|███████████████████████████████████████████████▉ | 439/834 [2:21:56<2:02:21, 18.59s/it]global step 440, epoch: 1, batch: 440, loss: 0.4685940742492676

Epoch 1: 54%|████████████████████████████████████████████████▉ | 449/834 [2:25:00<1:55:51, 18.06s/it]global step 450, epoch: 1, batch: 450, loss: 0.6924493908882141

Epoch 1: 55%|██████████████████████████████████████████████████ | 459/834 [2:28:05<1:54:37, 18.34s/it]global step 460, epoch: 1, batch: 460, loss: 1.1117645502090454

Epoch 1: 56%|███████████████████████████████████████████████████▏ | 469/834 [2:31:12<1:53:46, 18.70s/it]global step 470, epoch: 1, batch: 470, loss: 0.7002447247505188

Epoch 1: 57%|████████████████████████████████████████████████████▎ | 479/834 [2:34:16<1:49:10, 18.45s/it]global step 480, epoch: 1, batch: 480, loss: 0.29836368560791016

Epoch 1: 59%|█████████████████████████████████████████████████████▎ | 489/834 [2:37:22<1:46:40, 18.55s/it]global step 490, epoch: 1, batch: 490, loss: 0.5593700408935547

Epoch 1: 60%|██████████████████████████████████████████████████████▍ | 499/834 [2:40:27<1:43:47, 18.59s/it]global step 500, epoch: 1, batch: 500, loss: 0.7912716269493103

Epoch 1: 61%|███████████████████████████████████████████████████████▌ | 509/834 [2:43:34<1:40:48, 18.61s/it]global step 510, epoch: 1, batch: 510, loss: 0.3856365382671356

Epoch 1: 62%|████████████████████████████████████████████████████████▋ | 519/834 [2:46:37<1:35:40, 18.22s/it]global step 520, epoch: 1, batch: 520, loss: 0.790695309638977

Epoch 1: 63%|█████████████████████████████████████████████████████████▋ | 529/834 [2:49:43<1:34:50, 18.66s/it]global step 530, epoch: 1, batch: 530, loss: 0.682664155960083

Epoch 1: 65%|██████████████████████████████████████████████████████████▊ | 539/834 [2:52:51<1:32:00, 18.71s/it]global step 540, epoch: 1, batch: 540, loss: 0.8086856603622437

Epoch 1: 66%|███████████████████████████████████████████████████████████▉ | 549/834 [2:56:03<1:35:02, 20.01s/it]global step 550, epoch: 1, batch: 550, loss: 0.3808574378490448

Epoch 1: 67%|████████████████████████████████████████████████████████████▉ | 559/834 [2:59:10<1:25:19, 18.62s/it]global step 560, epoch: 1, batch: 560, loss: 0.6872433423995972

Epoch 1: 68%|██████████████████████████████████████████████████████████████ | 569/834 [3:02:16<1:22:16, 18.63s/it]global step 570, epoch: 1, batch: 570, loss: 0.5966670513153076

Epoch 1: 69%|███████████████████████████████████████████████████████████████▏ | 579/834 [3:05:21<1:18:42, 18.52s/it]global step 580, epoch: 1, batch: 580, loss: 0.7388714551925659

Epoch 1: 71%|████████████████████████████████████████████████████████████████▎ | 589/834 [3:08:22<1:14:11, 18.17s/it]global step 590, epoch: 1, batch: 590, loss: 0.5879547595977783

Epoch 1: 72%|█████████████████████████████████████████████████████████████████▎ | 599/834 [3:11:28<1:13:14, 18.70s/it]global step 600, epoch: 1, batch: 600, loss: 0.5512490272521973

Epoch 1: 73%|██████████████████████████████████████████████████████████████████▍ | 609/834 [3:14:33<1:09:21, 18.50s/it]global step 610, epoch: 1, batch: 610, loss: 0.4542902112007141

Epoch 1: 74%|███████████████████████████████████████████████████████████████████▌ | 619/834 [3:17:39<1:06:12, 18.48s/it]global step 620, epoch: 1, batch: 620, loss: 0.27746593952178955

Epoch 1: 75%|████████████████████████████████████████████████████████████████████▋ | 629/834 [3:20:43<1:03:11, 18.50s/it]global step 630, epoch: 1, batch: 630, loss: 0.43663206696510315

Epoch 1: 77%|█████████████████████████████████████████████████████████████████████▋ | 639/834 [3:23:48<1:00:13, 18.53s/it]global step 640, epoch: 1, batch: 640, loss: 0.23845508694648743

Epoch 1: 78%|████████████████████████████████████████████████████████████████████████▎ | 649/834 [3:26:50<54:21, 17.63s/it]global step 650, epoch: 1, batch: 650, loss: 0.521537721157074

Epoch 1: 79%|█████████████████████████████████████████████████████████████████████████▍ | 659/834 [3:29:55<53:52, 18.47s/it]global step 660, epoch: 1, batch: 660, loss: 0.35173436999320984

Epoch 1: 80%|██████████████████████████████████████████████████████████████████████████▌ | 669/834 [3:32:57<49:50, 18.12s/it]global step 670, epoch: 1, batch: 670, loss: 0.641721248626709

Epoch 1: 81%|███████████████████████████████████████████████████████████████████████████▋ | 679/834 [3:36:02<47:58, 18.57s/it]global step 680, epoch: 1, batch: 680, loss: 0.3030988872051239

Epoch 1: 83%|████████████████████████████████████████████████████████████████████████████▊ | 689/834 [3:39:07<44:45, 18.52s/it]global step 690, epoch: 1, batch: 690, loss: 0.4449141323566437

Epoch 1: 84%|█████████████████████████████████████████████████████████████████████████████▉ | 699/834 [3:42:14<41:52, 18.61s/it]global step 700, epoch: 1, batch: 700, loss: 0.6787422895431519

Epoch 1: 85%|███████████████████████████████████████████████████████████████████████████████ | 709/834 [3:45:18<38:27, 18.46s/it]global step 710, epoch: 1, batch: 710, loss: 0.8012028336524963

Epoch 1: 86%|████████████████████████████████████████████████████████████████████████████████▏ | 719/834 [3:48:23<35:21, 18.45s/it]global step 720, epoch: 1, batch: 720, loss: 0.9163274765014648

Epoch 1: 87%|█████████████████████████████████████████████████████████████████████████████████▎ | 729/834 [3:51:24<32:11, 18.39s/it]global step 730, epoch: 1, batch: 730, loss: 0.5091037750244141

Epoch 1: 89%|██████████████████████████████████████████████████████████████████████████████████▍ | 739/834 [3:54:30<29:24, 18.57s/it]global step 740, epoch: 1, batch: 740, loss: 0.4963628649711609

Epoch 1: 90%|███████████████████████████████████████████████████████████████████████████████████▌ | 749/834 [3:58:05<30:57, 21.86s/it]global step 750, epoch: 1, batch: 750, loss: 0.37903785705566406

Epoch 1: 91%|████████████████████████████████████████████████████████████████████████████████████▋ | 759/834 [4:01:15<23:16, 18.62s/it]global step 760, epoch: 1, batch: 760, loss: 0.7896307706832886

Epoch 1: 92%|█████████████████████████████████████████████████████████████████████████████████████▊ | 769/834 [4:04:19<19:58, 18.44s/it]global step 770, epoch: 1, batch: 770, loss: 0.4996657073497772

Epoch 1: 93%|██████████████████████████████████████████████████████████████████████████████████████▊ | 779/834 [4:07:25<16:56, 18.49s/it]global step 780, epoch: 1, batch: 780, loss: 0.5371623039245605

Epoch 1: 95%|███████████████████████████████████████████████████████████████████████████████████████▉ | 789/834 [4:10:30<13:58, 18.62s/it]global step 790, epoch: 1, batch: 790, loss: 0.28370147943496704

Epoch 1: 96%|█████████████████████████████████████████████████████████████████████████████████████████ | 799/834 [4:13:36<10:48, 18.52s/it]global step 800, epoch: 1, batch: 800, loss: 0.49523141980171204

Epoch 1: 97%|██████████████████████████████████████████████████████████████████████████████████████████▏ | 809/834 [4:16:37<07:29, 17.97s/it]global step 810, epoch: 1, batch: 810, loss: 0.5801178216934204

Epoch 1: 98%|███████████████████████████████████████████████████████████████████████████████████████████▎ | 819/834 [4:19:42<04:37, 18.47s/it]global step 820, epoch: 1, batch: 820, loss: 0.614586353302002

Epoch 1: 99%|████████████████████████████████████████████████████████████████████████████████████████████▍| 829/834 [4:22:40<01:29, 17.97s/it]global step 830, epoch: 1, batch: 830, loss: 0.44454532861709595

Epoch 1: 100%|█████████████████████████████████████████████████████████████████████████████████████████████| 834/834 [4:24:07<00:00, 19.00s/it]

eval loss: 0.53620, accu: 0.76332

预测: 这是一条测试数据。 情感: 中立

预测: 这是一条负面情绪的数据。 情感: 负面
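The figures in the terminal output can be cross-checked with a little arithmetic. The sketch below takes the step count (834) and the average time per step (19.00 s) from the final progress line and the batch size (16) from go.py. It assumes that the last, possibly incomplete, batch is not dropped, which bounds the size of the training set without revealing it exactly.

```python
steps = 834               # batches in the epoch, from the progress bar
batch_size = 16           # from go.py
seconds_per_step = 19.00  # average on the final progress line

# Total training time implied by the average step time.
total_seconds = round(steps * seconds_per_step)
hours, remainder = divmod(total_seconds, 3600)
minutes, seconds = divmod(remainder, 60)
elapsed = f"{hours}:{minutes:02d}:{seconds:02d}"

# Bounds on the number of training examples, assuming the last
# batch holds between 1 and batch_size examples.
max_examples = steps * batch_size
min_examples = (steps - 1) * batch_size + 1

print(elapsed)
print(min_examples, max_examples)
```

The computed duration of 4:24:06 agrees with the 4:24:07 shown by the progress bar to within the rounding of the average step time, and the training set holds between 13329 and 13344 examples under the stated assumption.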